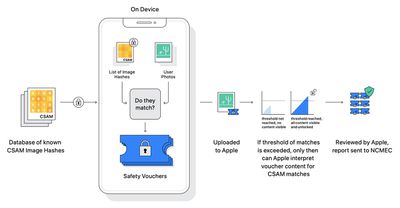

Apple this week announced that, starting later this year with iOS 15 and iPadOS 15, the company will be able to detect known Child Sexual Abuse Material (CSAM) images stored in iCloud Photos, enabling Apple to report these instances to the National Center for Missing and Exploited Children (NCMEC), a non-profit organization that works in collaboration with law enforcement agencies across the United States.

The plans have sparked concerns among some security researchers and other parties that Apple could eventually be forced by governments to add non-CSAM images to the hash list for nefarious purposes, such as to suppress political activism.

"No matter how well-intentioned, Apple is rolling out mass surveillance to the entire world with this," said prominent whistleblower Edward Snowden, adding that "if they can scan for kiddie porn today, they can scan for anything tomorrow." The non-profit Electronic Frontier Foundation also criticized Apple's plans, stating that "even a thoroughly documented, carefully thought-out, and narrowly-scoped backdoor is still a backdoor."

To address these concerns, Apple provided additional commentary about its plans today.

Apple's system for detecting known CSAM will be limited to the United States at launch. To address the potential for some governments to try to abuse the system, Apple confirmed to MacRumors that the company will consider any potential global expansion on a country-by-country basis after conducting a legal evaluation. Apple did not provide a timeframe for global expansion of the system, if such a move ever happens.

Apple also addressed the hypothetical possibility of a particular region in the world deciding to corrupt a safety organization in an attempt to abuse the system, noting that the system's first layer of protection is an undisclosed threshold that must be exceeded before a user is flagged for having inappropriate imagery. Even if the threshold is exceeded, Apple said its manual review process would serve as an additional barrier and would confirm the absence of known CSAM imagery. In that case, Apple said it would ultimately not report the flagged user to NCMEC or law enforcement agencies, and that the system would still be working exactly as designed.

Apple also highlighted some proponents of the system, including parties that have praised the company for its efforts to fight child abuse.

"We support the continued evolution of Apple's approach to child online safety," said Stephen Balkam, CEO of the Family Online Safety Institute. "Given the challenges parents face in protecting their kids online, it is imperative that tech companies continuously iterate and improve their safety tools to respond to new risks and actual harms."

Apple did admit that there is no silver bullet when it comes to the potential for the system to be abused, but the company said it is committed to using the system solely for detecting known CSAM imagery.
